Chapter 1: Introduction to Prompt Engineering

What is Prompt Engineering?

Prompt Engineering is the art of instructing an intelligent tool so that it understands exactly how to achieve our goals in a given context. Rather than discovering something new, we concentrate on formulating instructions for advanced computer programs called AI models, so that they can interpret our needs and deliver the responses we require.

History of Prompt Engineering

Imagine you have a smart friend (or any smart person you know) who acts like a robot that can answer any question you ask, but is a bit clueless sometimes. Long ago, people started figuring out ways to give clearer instructions to these robots so that they could get better answers. That's how Prompt Engineering was born! Over time, people have kept making Prompt Engineering better and better, like teaching your robot friend more tricks to give even cooler answers.

The history of Prompt Engineering traces back to the evolution of natural language processing (NLP) and the development of AI models capable of understanding and generating human-like text. Prompt Engineering has emerged as a crucial methodology for guiding AI models in generating desired outputs by crafting effective prompts tailored to specific tasks or domains. Let's explore the history of Prompt Engineering in detail:

Early Developments in Natural Language Processing (NLP)

- 1950s-1980s: The early decades of NLP research focused on rule-based approaches and handcrafted linguistic features for tasks such as machine translation and information retrieval. These approaches relied heavily on human-designed rules and lacked the scalability and flexibility of modern machine learning methods.

Rise of Statistical and Machine Learning Methods

- 1990s-2000s: The advent of statistical and machine learning methods revolutionized NLP research, enabling the development of data-driven approaches for tasks like language modeling, part-of-speech tagging, and syntactic parsing. Researchers began experimenting with probabilistic models, neural networks, and statistical techniques to analyze and generate natural language text.

Emergence of Pre-trained Language Models

- 2010s: The 2010s witnessed significant advancements in deep learning and the rise of pre-trained word embeddings and language models. Word2Vec, GloVe, and ELMo demonstrated the effectiveness of unsupervised pre-training for capturing semantic representations of words and sentences. These models paved the way for more sophisticated approaches to language understanding and generation.

Pre-trained Transformers and Prompt-based Learning

- 2018-2020: The rise of Transformer-based architectures (the Transformer itself was introduced in 2017), and particularly Google's release of the BERT (Bidirectional Encoder Representations from Transformers) model in 2018, marked a turning point in NLP research. Pre-trained Transformers demonstrated state-of-the-art performance across a wide range of NLP tasks by leveraging large-scale unsupervised pre-training followed by task-specific fine-tuning.

- 2020-2021: Researchers began exploring the concept of prompt-based learning, where AI models are guided to perform specific tasks using carefully crafted prompts. Techniques such as few-shot learning, prompt tuning, and prompt programming emerged as effective strategies for controlling and customizing the behavior of pre-trained Transformers to produce desired outputs.

Evolution of Prompt Engineering

- 2020-present: Prompt Engineering emerged as a key methodology for controlling and directing the behavior of AI models in generating text-based outputs. Researchers and practitioners recognized the importance of crafting effective prompts to guide AI models in understanding user intents, completing tasks, and generating responses. Prompt Engineering techniques encompass strategies for clarity, specificity, contextuality, and multimodality, aimed at optimizing the performance and usability of AI models across diverse applications and domains.

Applications and Impact

- 2020-present: Prompt Engineering has found applications in a wide range of domains, including natural language understanding, conversational AI, content generation, recommendation systems, and more. By leveraging Prompt Engineering techniques, developers and researchers can tailor AI models to specific tasks, improve user interactions, and address real-world challenges in areas such as healthcare, education, finance, and entertainment.

Ongoing Research and Future Directions

- 2021 and beyond: Ongoing research in Prompt Engineering continues to explore new techniques and methodologies for enhancing the capabilities of AI models in understanding and generating human-like text. Future directions include advancing multimodal prompt engineering, addressing biases and ethical considerations, improving interpretability and controllability of AI models, and extending Prompt Engineering to novel applications and domains.

Key Concepts in Prompt Engineering

Think of Prompt Engineering as the secret language between us and the AI models. We have to speak their language to get the best results! So, we'll learn about things like "prompts," which are like the special sentences we tell the AI models to get the right answers. We'll also learn about "language models," which are the super-smart programs that understand our prompts and give us the answers we want.

Let's delve into the key concepts in Prompt Engineering in detail:

1. Clarity

Explanation: Clarity refers to the clear and unambiguous communication of the user's intent or query in the prompt. Clear prompts help AI models understand the task or question at hand, reducing ambiguity and improving the accuracy of generated responses.

Techniques:

- Specificity: Provide precise details or parameters in the prompt to narrow down the scope of the request.

- Conciseness: Keep prompts concise and to the point to avoid confusion or misunderstanding.

- Avoid Ambiguity: Phrase prompts in a way that leaves little room for interpretation or ambiguity.

Example:

- Unclear Prompt: "Find a restaurant."

- Clear Prompt: "Recommend a vegetarian-friendly Italian restaurant in downtown Manhattan."

2. Specificity

Explanation: Specificity involves providing precise details or parameters in the prompt to guide the AI model in generating tailored responses. Specific prompts enable AI models to produce more relevant and targeted outputs.

Techniques:

- Detailing Requirements: Include specific requirements or criteria in the prompt to guide the AI model's response.

- Setting Constraints: Define constraints or limitations to narrow down the range of possible outputs.

- Providing Examples: Offer examples or context to illustrate the type of response expected from the AI model.

Example:

- General Prompt: "Find a movie."

- Specific Prompt: "Recommend a classic romantic comedy film released in the 1990s."

3. Contextuality

Explanation: Contextuality involves embedding relevant context or background information in the prompt to enhance the AI model's understanding of the user's intent. Contextual prompts provide additional cues or constraints to guide the AI model in interpreting the request accurately.

Techniques:

- Adding Context: Include additional information or context relevant to the task or query.

- Providing Examples: Offer examples or scenarios to provide context for the AI model.

- Considering User Preferences: Incorporate user preferences or past interactions to personalize prompts.

Example:

- Basic Prompt: "Weather forecast."

- Contextual Prompt: "What's the weather forecast for tomorrow's outdoor wedding ceremony?"

4. Framing the Request

Explanation: Framing the request involves structuring the prompt in a way that conveys the user's intent clearly and directs the AI model's attention to specific aspects or requirements of the task.

Techniques:

- Posing Questions: Frame prompts as questions to guide the AI model's response.

- Expressing Preferences: Use language that expresses preferences or requirements to shape the AI model's output.

- Providing Instructions: Offer clear instructions or directives to guide the AI model's behavior.

Example:

- Basic Prompt: "Translate."

- Framed Prompt: "Translate the following sentence from English to French: 'Where is the nearest train station?'"

5. Language Style Guidelines

Explanation: Language style guidelines dictate the tone, style, and voice of prompts to ensure consistency and coherence in generated outputs. Adhering to predefined style guidelines helps maintain brand identity and user experience across different interactions with AI models.

Techniques:

- Defining Style Parameters: Establish parameters for tone, style, and voice to guide prompt creation.

- Consistency: Ensure consistency in language style across different prompts and interactions.

- Tailoring to Audience: Customize language style guidelines based on the target audience or domain.

Example:

- Generic Prompt: "Create a social media post."

- Guided Prompt: "Craft a lighthearted and engaging social media post promoting our new product launch."

By incorporating these key concepts into prompt engineering practices, developers and researchers can optimize the effectiveness of AI models in understanding and responding to user inputs, leading to more accurate, relevant, and personalized interactions.
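As a rough illustration of how these concepts can be combined, the sketch below assembles a prompt from a task, some context, a list of constraints, and a style instruction. The function name `build_prompt` and its parameters are hypothetical and chosen only for this example; they are not part of any library.

```python
def build_prompt(task, context=None, constraints=None, style=None):
    """Assemble a prompt from a task plus optional context, constraints and style.

    Hypothetical helper for illustration only.
    """
    parts = [task.strip()]  # framing: the request itself comes first
    if context:
        parts.append(f"Context: {context.strip()}")  # contextuality
    if constraints:
        parts.append("Constraints: " + "; ".join(constraints))  # specificity
    if style:
        parts.append(f"Style: {style.strip()}")  # language style guidelines
    return "\n".join(parts)


prompt = build_prompt(
    task="Recommend a restaurant.",
    context="I am in downtown Manhattan.",
    constraints=["Italian cuisine", "vegetarian-friendly"],
    style="Reply in one short paragraph.",
)
print(prompt)
```

Keeping each ingredient in its own labeled line makes it easy to see which concept a prompt is missing before it is sent to a model.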

Generating text: exploring various decoding techniques for language generation with Transformers

Generating text using Transformers involves several decoding methods, each with its unique characteristics and applications. Here's an overview of the most common decoding methods used in language generation with Transformers:

1. Greedy Decoding

Greedy decoding is the simplest method where the model always selects the token with the highest probability at each step. This approach is fast but can lead to repetitive and suboptimal text generation.

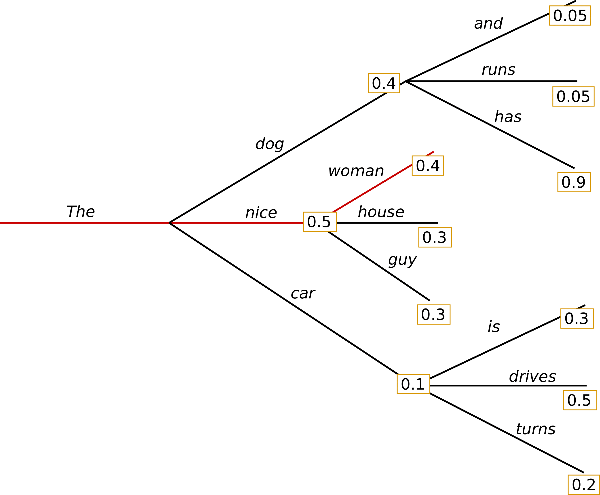

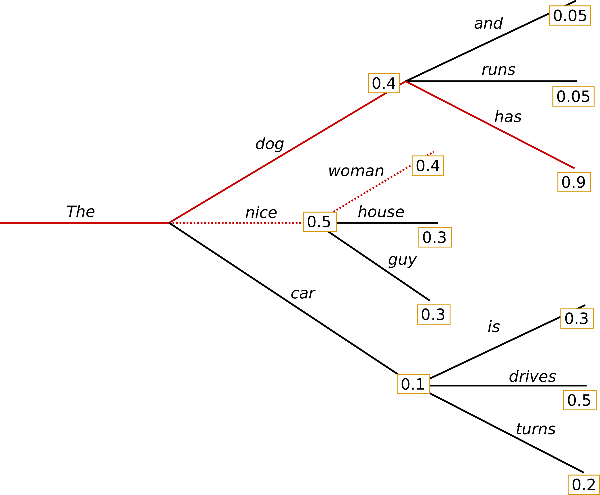

Starting from the word "The", the model selects the next word with the highest probability, "nice", and continues in this manner to generate the word sequence ("The", "nice", "woman") with a combined probability of 0.5 x 0.4 = 0.2.

The same procedure applies to a real model: for instance, GPT-2 can continue the context ("I", "enjoy", "walking", "with", "my", "cute", "dog") one token at a time.

Steps:

- Start with a given prompt.

- For each position in the sequence, select the token with the highest probability.

- Append the selected token to the sequence and repeat until an end-of-sequence token is generated or the desired length is reached.

Pros:

- Fast and straightforward.

- Easy to implement.

Cons:

- Can miss out on better sequences that have slightly lower probability tokens early on.

- Often results in repetitive or boring text.
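The greedy steps above can be sketched on a toy next-word table. This is a minimal illustration, not a real language model: the table conditions only on the previous word, the entries for "nice", "woman", "dog" and "has" follow the worked example in the text, and the remaining entries are invented so that each row sums to 1.

```python
# Toy next-word table (assumption: only the previous word matters).
# "nice", "woman", "dog" and "has" follow the text; other entries are invented.
NEXT_WORD_PROBS = {
    "The": {"nice": 0.5, "dog": 0.4, "car": 0.1},
    "nice": {"woman": 0.4, "house": 0.3, "guy": 0.3},
    "dog": {"has": 0.9, "runs": 0.05, "and": 0.05},
    "car": {"is": 0.3, "drives": 0.5, "turns": 0.2},
}


def greedy_decode(start, steps):
    """Pick the single most probable next word at every step."""
    sequence = [start]
    probability = 1.0
    for _ in range(steps):
        candidates = NEXT_WORD_PROBS.get(sequence[-1])
        if not candidates:  # no known continuation: stop early
            break
        word = max(candidates, key=candidates.get)
        probability *= candidates[word]
        sequence.append(word)
    return sequence, probability


sequence, probability = greedy_decode("The", steps=2)
print(sequence, round(probability, 2))  # ['The', 'nice', 'woman'] 0.2
```

The result reproduces the 0.5 x 0.4 = 0.2 path from the example: the locally best first word "nice" is chosen even though a different first word could lead to a more probable sequence overall.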

2. Beam Search

Beam search maintains multiple sequences (beams) at each step and selects the top ones based on cumulative probabilities. This allows the model to explore multiple potential sequences and choose the most likely ones.

During the first time step, in addition to the most probable hypothesis ("The", "nice"), beam search also considers the second most likely one, ("The", "dog"). By the second time step, beam search determines that the word sequence ("The", "dog", "has") has a higher probability, 0.36, than ("The", "nice", "woman"), which has a probability of 0.20. It successfully identifies the most likely word sequence in our simple example!

Steps:

- Start with a given prompt and initialize a set of beams.

- At each step, expand each beam by generating all possible next tokens.

- Keep only the top k beams (where k is the beam width) based on their cumulative probabilities.

- Continue until all beams reach an end-of-sequence token or the desired length.

Pros:

- More likely to find the most probable sequence compared to greedy decoding.

- Often produces more coherent text than greedy decoding.

Cons:

- Computationally expensive.

- May still miss high-quality sequences due to fixed beam width.
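The beam search steps above can be sketched on the same kind of toy next-word table. This is a minimal illustration under simplifying assumptions: the table conditions only on the previous word, the entries for "nice", "woman", "dog" and "has" follow the worked example in the text, and the remaining entries are invented. Practical implementations usually sum log-probabilities instead of multiplying raw probabilities.

```python
# Toy next-word table (assumption: only the previous word matters).
# "nice", "woman", "dog" and "has" follow the text; other entries are invented.
NEXT_WORD_PROBS = {
    "The": {"nice": 0.5, "dog": 0.4, "car": 0.1},
    "nice": {"woman": 0.4, "house": 0.3, "guy": 0.3},
    "dog": {"has": 0.9, "runs": 0.05, "and": 0.05},
    "car": {"is": 0.3, "drives": 0.5, "turns": 0.2},
}


def beam_search(start, steps, beam_width):
    """Keep the `beam_width` most probable sequences at every step."""
    beams = [([start], 1.0)]
    for _ in range(steps):
        expanded = []
        for sequence, probability in beams:
            candidates = NEXT_WORD_PROBS.get(sequence[-1], {})
            if not candidates:  # finished beam: carry it over unchanged
                expanded.append((sequence, probability))
                continue
            for word, p in candidates.items():
                expanded.append((sequence + [word], probability * p))
        # Rank all expansions by cumulative probability and prune.
        expanded.sort(key=lambda item: item[1], reverse=True)
        beams = expanded[:beam_width]
    return beams


best_sequence, best_probability = beam_search("The", steps=2, beam_width=2)[0]
print(best_sequence, round(best_probability, 2))  # ['The', 'dog', 'has'] 0.36
```

With a beam width of 2, the hypothesis starting with "dog" survives the first step and wins at the second, which is exactly the sequence greedy decoding misses.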

3. Top-k Sampling

Top-k sampling introduces randomness by sampling from the top k most probable tokens at each step instead of always choosing the highest probability token.

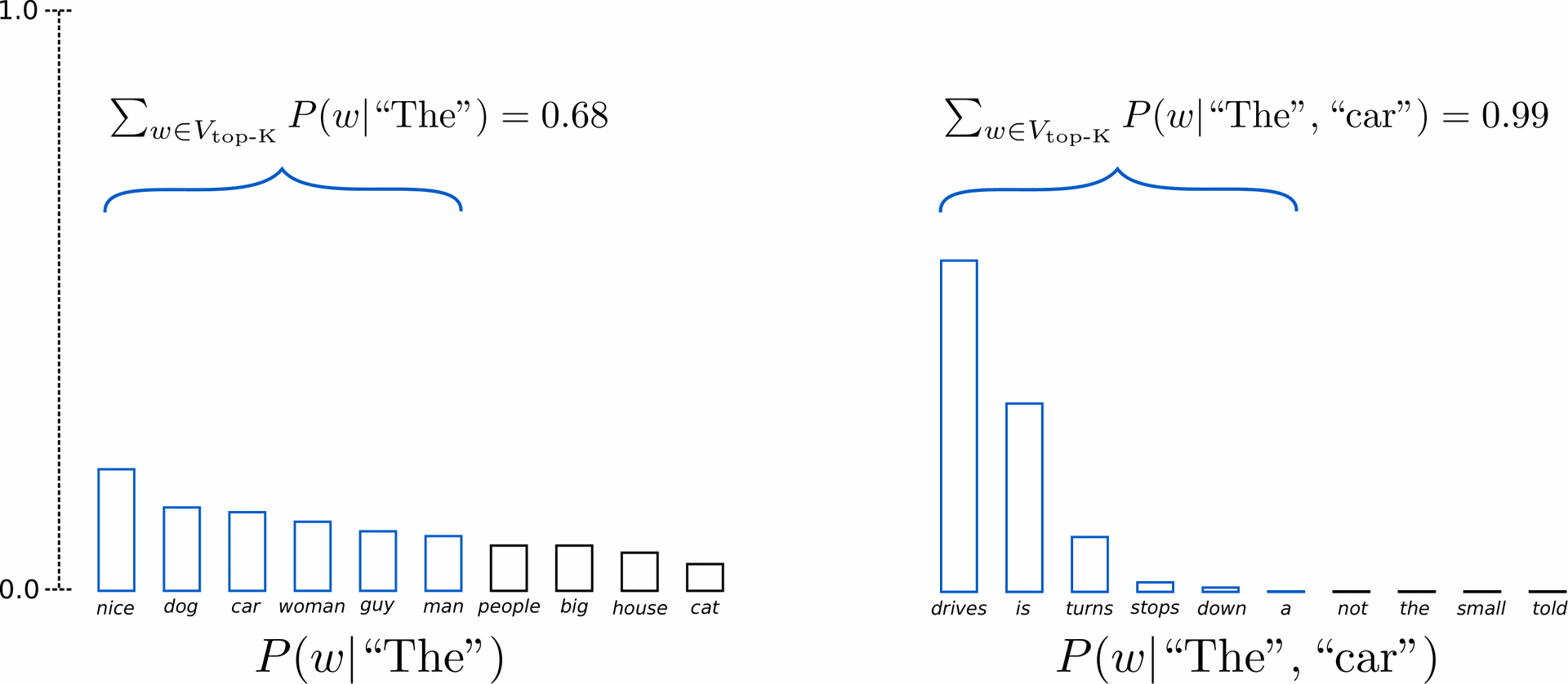

With K set to 6, we narrow down our sampling pool to just 6 words. The 6 most likely words, denoted V_top-K, cover around two-thirds of the total probability mass in the first step, but nearly all of it in the second step. This approach effectively filters out odd choices like "not," "the," "small," and "told" in the second sampling round.

Steps:

- Start with a given prompt.

- At each step, consider only the top k tokens with the highest probabilities.

- Sample one token from this subset based on their normalized probabilities.

- Append the selected token to the sequence and repeat until an end-of-sequence token is generated or the desired length is reached.

Pros:

- Reduces repetition and can generate more diverse text.

- Balances between quality and randomness.

Cons:

- The choice of k significantly affects the output quality and diversity.
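A single top-k sampling step can be sketched as follows. The function name `top_k_sample` and the probability values are hypothetical, chosen only to illustrate the idea; a real model would supply a distribution over its whole vocabulary.

```python
import random


def top_k_sample(probs, k, rng=random):
    """Sample one token from the k most probable entries of `probs`."""
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    tokens = [token for token, _ in top]
    weights = [p for _, p in top]
    # random.choices treats weights as relative, so the kept
    # probabilities are effectively renormalized.
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical next-token distribution.
probs = {"nice": 0.35, "dog": 0.25, "car": 0.15,
         "woman": 0.10, "guy": 0.08, "told": 0.07}

rng = random.Random(0)  # seeded only to make the run repeatable
samples = {top_k_sample(probs, k=3, rng=rng) for _ in range(200)}
print(samples)  # only tokens among "nice", "dog" and "car" can appear
```

Setting k to 1 reduces this to greedy decoding, while setting k to the vocabulary size reduces it to plain sampling from the full distribution.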

4. Top-p (Nucleus) Sampling

Top-p sampling dynamically chooses the smallest set of tokens whose cumulative probability is at least p. This method adapts the number of considered tokens based on the distribution's shape.

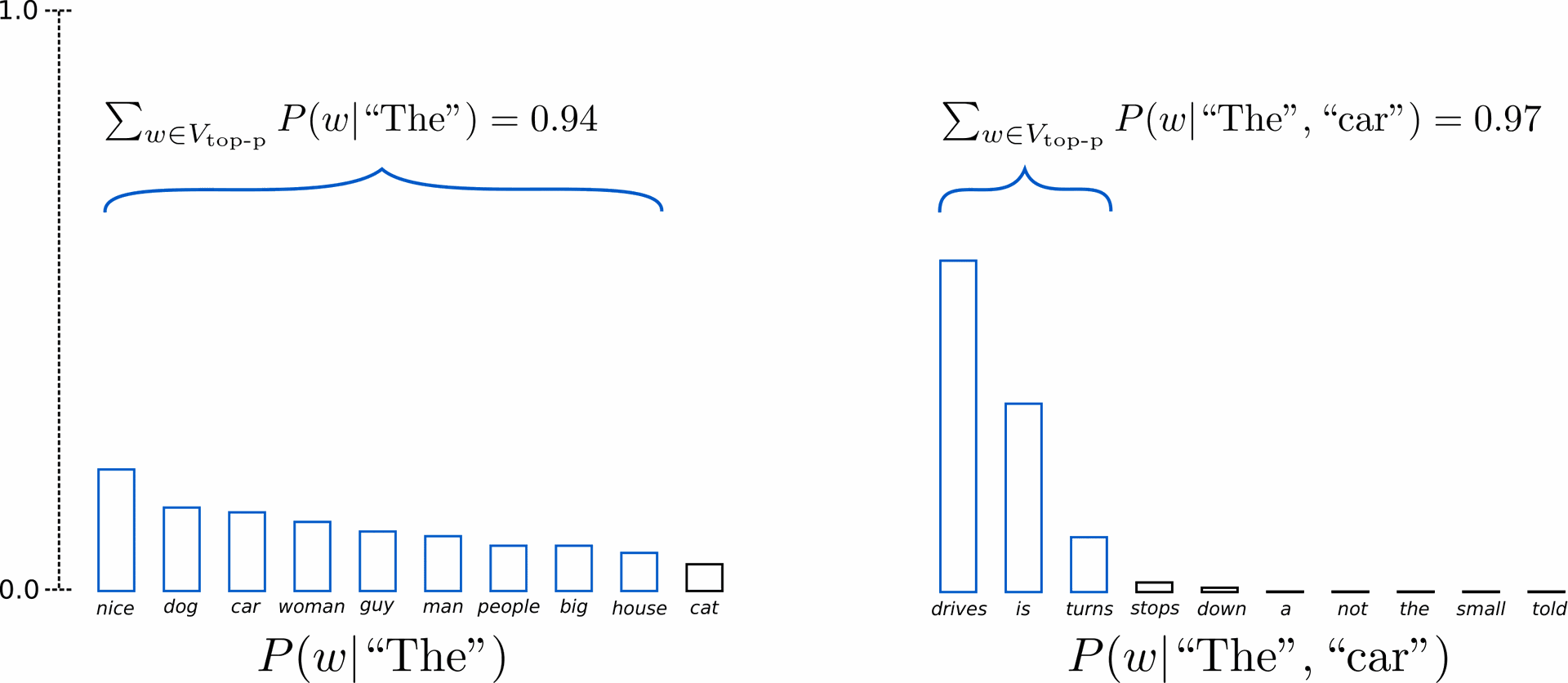

Assuming p = 0.92, top-p sampling selects the minimum number of words needed to collectively exceed p = 92% of the probability mass, denoted V_top-p. In the first example, this included the 9 most probable words, whereas in the second example only the top 3 words are needed to surpass 92%. Surprisingly straightforward! The method keeps a wide range of words when the next word is less predictable, such as P(w | "The"), and only a few words when the next word is more predictable, such as P(w | "The", "car").

Steps:

- Start with a given prompt.

- At each step, sort the tokens by probability and select the smallest subset whose cumulative probability is at least p.

- Sample one token from this subset based on their normalized probabilities.

- Append the selected token to the sequence and repeat until an end-of-sequence token is generated or the desired length is reached.

Pros:

- Adapts to different probability distributions, often leading to more coherent and contextually appropriate text.

- Combines the benefits of diversity and quality.

Cons:

- More complex to implement than top-k sampling.
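The nucleus selection described above can be sketched as follows. The function name `top_p_filter` and both example distributions are hypothetical and serve only to show how the size of the kept set adapts to the shape of the distribution.

```python
import random


def top_p_filter(probs, p):
    """Return the smallest set of tokens whose cumulative probability is
    at least p, with probabilities renormalized to sum to 1."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    nucleus, cumulative = [], 0.0
    for token, prob in ranked:
        nucleus.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    return {token: prob / cumulative for token, prob in nucleus}


# Hypothetical distributions: one flat (unpredictable next word),
# one peaked (predictable next word).
flat = {"nice": 0.30, "dog": 0.25, "car": 0.20,
        "woman": 0.15, "guy": 0.06, "told": 0.04}
peaked = {"drives": 0.70, "is": 0.25, "turns": 0.03, "stops": 0.02}

flat_nucleus = top_p_filter(flat, p=0.92)
peaked_nucleus = top_p_filter(peaked, p=0.92)
print(len(flat_nucleus), len(peaked_nucleus))  # 5 2

# Sampling then draws from the renormalized nucleus.
rng = random.Random(0)
token = rng.choices(list(peaked_nucleus), weights=list(peaked_nucleus.values()), k=1)[0]
print(token)
```

The flat distribution needs five tokens to reach 92% of the mass, while the peaked one needs only two, which is the adaptive behavior that distinguishes top-p from a fixed k.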

5. Temperature Sampling

Temperature sampling reshapes the token distribution by dividing the logits (raw scores) by a temperature parameter before applying softmax. Lower temperatures make the distribution sharper (more deterministic), while higher temperatures make it flatter (more random).

Steps:

- Start with a given prompt.

- At each step, divide the logits (raw scores) of the tokens by the temperature.

- Apply softmax to obtain the probabilities.

- Sample one token from this adjusted distribution.

- Append the selected token to the sequence and repeat until an end-of-sequence token is generated or the desired length is reached.

Pros:

- Allows fine-tuning the randomness of the generated text.

- Can produce a wide range of outputs from deterministic to highly random.

Cons:

- Needs careful tuning of the temperature parameter.
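The effect of the temperature can be seen in a small sketch. The function name `softmax_with_temperature` and the logit values are hypothetical, chosen only for illustration.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Divide logits by the temperature, then apply a numerically stable softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max to avoid overflow in exp
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical raw scores for three tokens
for temperature in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

As the temperature rises, the probability of the top-scoring token shrinks and the distribution flattens; as it approaches zero, sampling behaves more and more like greedy decoding.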

6. Combination Methods

Often, these methods are combined to leverage their respective strengths. For example, one might use top-p sampling with a certain temperature setting to balance diversity and coherence.

Example Combination:

- Use temperature sampling with a temperature of 0.7.

- Within the adjusted probabilities, apply top-p sampling with p=0.9.
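The combination above can be sketched in one function: apply a temperature of 0.7 to the logits, then restrict sampling to the top-p nucleus with p = 0.9. The function name and the logit values are hypothetical; libraries that offer both options may order or implement the two steps differently.

```python
import math
import random


def sample_with_temperature_and_top_p(logits, temperature=0.7, top_p=0.9, rng=random):
    """Temperature-scale the logits, keep the top-p nucleus, sample one token."""
    # Step 1: temperature-scaled, numerically stable softmax.
    scaled = {token: score / temperature for token, score in logits.items()}
    largest = max(scaled.values())
    exps = {token: math.exp(s - largest) for token, s in scaled.items()}
    total = sum(exps.values())
    probs = {token: e / total for token, e in exps.items()}

    # Step 2: smallest set of tokens whose cumulative probability >= top_p.
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    nucleus, cumulative = [], 0.0
    for token, p in ranked:
        nucleus.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # Step 3: sample from the nucleus (weights are renormalized by choices).
    tokens = [token for token, _ in nucleus]
    weights = [p for _, p in nucleus]
    return rng.choices(tokens, weights=weights, k=1)[0]


logits = {"walk": 2.0, "run": 1.5, "sleep": 0.2, "fly": -1.0}  # hypothetical
rng = random.Random(0)
print(sample_with_temperature_and_top_p(logits, rng=rng))
```

Lowering the temperature first concentrates probability on the leading tokens, so the nucleus that follows tends to be smaller than it would be at a temperature of 1.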

Each decoding method has its trade-offs between quality, diversity, and computational cost. The choice of method depends on the specific requirements of the application, such as the desired level of creativity, coherence, and speed. By experimenting with these methods and their parameters, you can tailor the text generation process to best fit your needs.

Conclusion

Real-life Example 1: Ordering Pizza with Prompt Engineering

Imagine you're ordering pizza from a robot chef, and you want to use Prompt Engineering to get your perfect pizza. You tell the robot chef, "Make me a delicious pizza, please!" But the robot chef doesn't know what toppings you like. So, you use Prompt Engineering to be more specific. You say, "I want a pizza with pepperoni, mushrooms, and extra cheese."

Here's how it relates to Prompt Engineering:

- What is Prompt Engineering?: It's like telling the robot chef exactly what you want on your pizza so it can make it just right.

- History of Prompt Engineering: People have been learning how to give better instructions to robots (or AI models) for a long time, just like how you learned to give better instructions to your robot chef.

- Key Concepts in Prompt Engineering: You learn how to craft your request (or prompt) in a way that the robot chef understands, using the right words and details, so it can make your pizza exactly as you want it.

So, Prompt Engineering is like being a pizza-making pro, but instead of dough and toppings, you're crafting sentences to get the best results from AI models!

Real-life Example 2: Ordering Pizza with Prompt Engineering

Now, let's say the robot chef is super smart but a bit forgetful. You ordered your favorite pizza last time, but it doesn't remember your usual toppings. Here's where Prompt Engineering comes in handy:

- Crafting the Prompt: Instead of just saying, "I want a pizza," you use Prompt Engineering to be more specific. You say, "Hey robot chef, remember that delicious pizza I had last time? I want the same one, please!" By giving it a reminder of what you liked before, you help the robot chef understand exactly what you want this time.

- Understanding Your Preferences: The robot chef, using its AI brain, remembers your previous order and knows exactly what toppings you like. It uses that information to make your pizza just the way you love it, without you having to repeat yourself.

- Getting the Perfect Pizza: Thanks to Prompt Engineering, you get your favorite pizza without any confusion or mistakes. The robot chef understands your request perfectly and delivers the perfect pizza right to your doorstep!

So, in this example, Prompt Engineering helped you communicate with the robot chef effectively, ensuring you get exactly what you want. Just like with ordering pizza, Prompt Engineering helps us communicate clearly with AI models so they can give us the best answers or perform the right tasks. Whether it's ordering pizza or solving complex problems, Prompt Engineering helps us get the results we need!

Real-life Example 3: Writing a Story with Prompt Engineering

Imagine you're a budding writer, and you want some help coming up with a story idea. You decide to use Prompt Engineering to get your creative juices flowing.

- Crafting the Prompt: You tell the AI model, "I want to write a thrilling adventure story set in a mysterious forest." With this prompt, you're giving the AI model a clear direction of what you're looking for—a thrilling adventure story in a mysterious forest.

- Generating Ideas: The AI model, using its vast knowledge and creativity, starts generating ideas based on your prompt. It suggests plot twists, interesting characters, and suspenseful settings—all tailored to your specific request.

- Refining the Story: You review the AI-generated ideas and decide which ones you like best. Maybe you choose to include a brave protagonist, a hidden treasure, and a plot twist involving a secret society. With these ideas in hand, you start crafting your story.

- Collaborating with the AI: Throughout the writing process, you continue to use Prompt Engineering to refine your story. Whenever you need inspiration or guidance, you ask the AI model for suggestions based on your initial prompt.

- Finalizing the Story: After several rounds of drafting and editing, you finally complete your thrilling adventure story. Thanks to Prompt Engineering, you were able to kickstart your creativity, brainstorm ideas, and refine your story, resulting in a captivating tale.

What we have learned is that Prompt Engineering serves as a creative tool that assists you in generating story ideas and guiding the writing process. By providing clear prompts to the AI model, you can leverage its capabilities to enhance your storytelling and bring your imagination to life. Just like ordering pizza or writing a story, Prompt Engineering empowers you to communicate effectively with AI models and achieve your desired outcomes.
